Source-Free Model Transferability Assessment for Smart Surveillance via Randomly Initialized Networks

Image credit: Unsplash

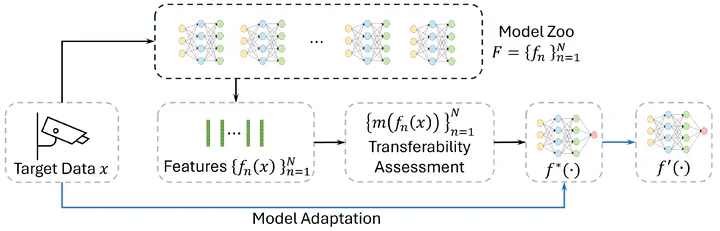

Smart surveillance cameras are increasingly employed for automated tasks such as event and anomaly detection within smart city infrastructures. However, the heterogeneity of deployment environments, ranging from densely populated urban intersections to quiet residential neighborhoods, renders the use of a single, universal model suboptimal. To address this, we propose the construction of a model zoo comprising models trained for diverse environmental contexts. We introduce an automated transferability assessment framework that identifies the most suitable model for a new deployment site. This task is particularly challenging in smart surveillance settings, where both source data and labeled target data are typically unavailable. Existing approaches often depend on pretrained embeddings or assumptions about model uncertainty, which may not hold reliably in real-world scenarios. In contrast, our method leverages embeddings generated by randomly initialized neural networks (RINNs) to construct task-agnostic reference embeddings without relying on pretraining. By comparing feature representations of the target data extracted using both pretrained models and RINNs, this method eliminates the need for labeled data. Structural similarity between embeddings is quantified using minibatch Centered Kernel Alignment (CKA), enabling efficient and scalable model ranking. We evaluate our method on realistic surveillance datasets across multiple downstream tasks, including object tagging, anomaly detection, and event classification. Our embedding-level score achieves high correlations with ground-truth model rankings (relative to fine-tuned baselines), attaining Kendall’s τ values of 0.95, 0.94, and 0.89 on these tasks, respectively. These results demonstrate that our framework consistently selects the most transferable model, even when the specific downstream task or objective is unknown. This confirms the practicality of our approach as a robust, low-cost precursor to model adaptation or retraining.

A fundamental challenge in deploying machine learning models is "domain shift," where performance degrades when a model encounters data different from its training set. This problem is magnified by real-world operational constraints, specifically the "source-free, unsupervised" setting. "Source-free" means the original training data is inaccessible due to privacy or commercial confidentiality, while "unsupervised" means the new target domain has no ground-truth labels for evaluation. This dual constraint renders traditional model selection and adaptation methods ineffective.

We designed and developed a principled transferability assessment framework that operates effectively under these demanding conditions. The core innovation is the use of Randomly Initialized Neural Networks (RINNs) as an unbiased, task-agnostic reference. Instead of relying on potentially biased features, our framework evaluates a pretrained model’s suitability by measuring the alignment of its learnt representation structure against the RINNs' outputs on the target data. This comparison, quantified efficiently using minibatch Centered Kernel Alignment (CKA), provides a robust score to rank models without needing source data or target labels.
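To make the comparison step concrete, the following is a minimal sketch of minibatch CKA between two sets of embeddings computed on the same target samples. It is not the authors' implementation: the linear kernel, the unbiased HSIC estimator (a common choice for minibatch CKA), the batch size, and the function names `unbiased_hsic` and `minibatch_cka` are all assumptions made for illustration, since the text does not specify them.

```python
import numpy as np


def unbiased_hsic(K, L):
    """Unbiased HSIC estimate for two symmetric n x n Gram matrices (n > 3).

    Assumption: this estimator is a common choice for minibatch CKA; the
    source text does not state which estimator is used.
    """
    n = K.shape[0]
    K = K.copy()
    L = L.copy()
    np.fill_diagonal(K, 0.0)  # the estimator uses zero-diagonal Gram matrices
    np.fill_diagonal(L, 0.0)
    trace_kl = np.sum(K * L)  # equals trace(K @ L) because L is symmetric
    sum_product = K.sum() * L.sum() / ((n - 1) * (n - 2))
    cross = 2.0 / (n - 2) * (K.sum(axis=0) @ L.sum(axis=1))
    return (trace_kl + sum_product - cross) / (n * (n - 3))


def minibatch_cka(feats_a, feats_b, batch_size=64):
    """Linear-kernel CKA accumulated over minibatches.

    feats_a, feats_b: arrays of shape (num_samples, dim_a) and
    (num_samples, dim_b), with rows aligned to the same target samples.
    A trailing incomplete batch is dropped.
    """
    n = feats_a.shape[0]
    if feats_b.shape[0] != n:
        raise ValueError("both feature sets must cover the same samples")
    if batch_size < 4 or n < batch_size:
        raise ValueError("need at least one full batch of 4 or more samples")
    hsic_ab = hsic_aa = hsic_bb = 0.0
    for start in range(0, n - batch_size + 1, batch_size):
        xa = feats_a[start:start + batch_size]
        xb = feats_b[start:start + batch_size]
        K = xa @ xa.T  # linear-kernel Gram matrix (assumed kernel)
        L = xb @ xb.T
        hsic_ab += unbiased_hsic(K, L)
        hsic_aa += unbiased_hsic(K, K)
        hsic_bb += unbiased_hsic(L, L)
    # The 1/num_batches factors cancel between numerator and denominator.
    return hsic_ab / np.sqrt(hsic_aa * hsic_bb)
```

In this sketch, `feats_a` would hold a candidate pretrained model's embeddings of the unlabeled target data and `feats_b` the embeddings of a randomly initialized network on the same samples. How the resulting similarity values are aggregated across RINNs and turned into a ranking is left open here, because the text above does not describe it.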

The RINN-based framework was evaluated on multiple real-world surveillance datasets and downstream tasks, including object tagging, anomaly detection, and event classification. It consistently outperformed state-of-the-art baselines, with Kendall's τ between its predicted model ranking and the ground-truth performance ranking reaching 0.95, 0.94, and 0.89 on these tasks, respectively. This work provides a robust and practical solution for the critical first step of model adaptation in privacy-sensitive and data-limited systems.
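For readers unfamiliar with the evaluation metric, here is a small sketch of how Kendall's τ compares a predicted model ranking with a ground-truth ranking. The function name `kendall_tau` and the simple tau-a variant (tied pairs count as neither concordant nor discordant) are assumptions for illustration; the text does not say which variant was used.

```python
from itertools import combinations


def kendall_tau(predicted_scores, true_performance):
    """Kendall's tau-a between two equally long score lists.

    Each pair of models is concordant if both lists order the pair the same
    way and discordant if they order it oppositely. Tied pairs contribute
    to neither count (an assumption of this simple variant).
    """
    if len(predicted_scores) != len(true_performance):
        raise ValueError("both lists must score the same models")
    n = len(predicted_scores)
    if n < 2:
        raise ValueError("need at least two models to compare rankings")
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        direction = (predicted_scores[i] - predicted_scores[j]) * (
            true_performance[i] - true_performance[j]
        )
        if direction > 0:
            concordant += 1
        elif direction < 0:
            discordant += 1
    total_pairs = n * (n - 1) // 2
    return (concordant - discordant) / total_pairs
```

A value of 1 means the transferability score orders the model zoo exactly as the ground-truth performance does, and a value of -1 means the order is fully reversed.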