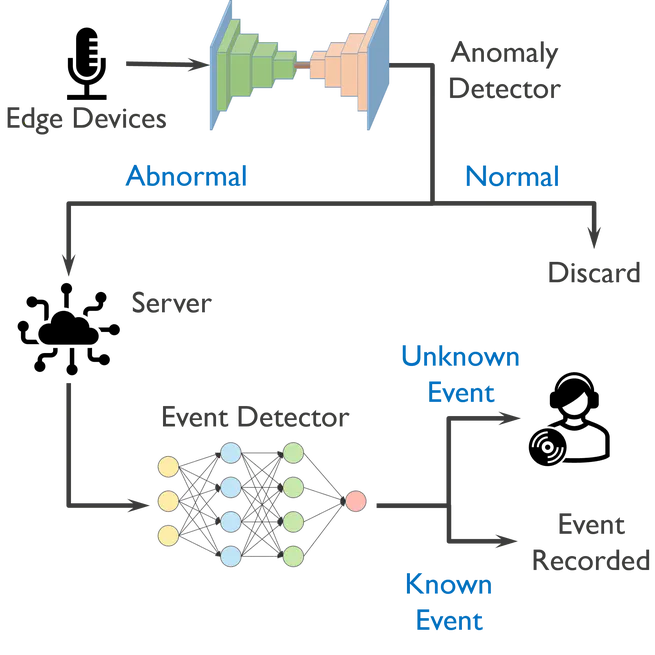

SensCity x AsaSense: Critical Analysis of Urban Acoustic Surveillance

A strategic research collaboration with the SensCity project (AsaSense) that uses city-scale raw acoustic data to expose the failure modes of standard surveillance models and proposes context-aware architectural solutions.

The Research Gap & Motivation

Why “Off-the-Shelf” Fails in the Wild: Most acoustic surveillance systems are validated on clean, curated datasets, and their performance on raw, unprocessed urban audio remains largely unverified.

Our Mission: In collaboration with AsaSense, we accessed a unique stream of continuous, uncurated audio from Ghent and Rotterdam. Rather than simply deploying a standard model, we set out to stress-test two dominant paradigms, anomaly detection and sound tagging, to identify why they fail in dynamic environments (e.g., temporal drift, open-set events), and to propose robust alternatives.

Operational Context (The SensCity Testbed)

This project leveraged real-world infrastructure to diagnose algorithmic limitations:

Raw Data Ingestion: Unlike academic datasets, the SensCity sensor network captures the “messy” reality of cities over two years: wind noise, overlapping soundscapes, and non-stationary backgrounds. Most importantly, the data comes without any annotations.

System Audit: We applied state-of-the-art anomaly detection and sound tagging models to this raw stream. The analysis revealed that global models generate an unmanageable number of false alarms due to contextual blindness (e.g., treating a weekend market as an anomaly because the model had only seen weekday traffic), which causes operator fatigue and ultimately system failure.

Core Conclusion: Our experiments showed that a single global model is insufficient for city-scale deployment. Instead, Context-Specific Modeling (sensor-specific baselines) is a prerequisite for operational reliability.

Proposed Resolution: Based on these findings, we formulated a Context-Aware Design Framework that advocates sensor-specific baselines and adaptive thresholding to handle the inherent variance of city life.

Core Methodologies

Data Source: High-fidelity, long-term raw acoustic logs from the AsaSense deployment (Ghent & Rotterdam).

Diagnosis Method: Cross-context evaluation (spatial and temporal domain shift).

Algorithmic Focus: Unsupervised deep autoregressive modeling (WaveNet) vs. pre-trained tagging models.

Architecture Design: Feasibility analysis of hybrid edge-cloud pipelines to mitigate bandwidth bottlenecks.

Technical Analysis & Innovations

1. Diagnosing the “Generalization Fallacy”

The Problem: We demonstrated that state-of-the-art anomaly detectors suffer from severe concept drift. A model trained on “winter data” failed catastrophically on summer evenings because human activity patterns had changed.

The Solution: We proposed a Context-Specific Modeling approach and showed that training lightweight, dedicated models for each sensor location significantly outperforms a massive, generic global model in anomaly retrieval.

2. The Limits of Semantic Tagging

The Finding: Standard sound taggers (trained on AudioSet) struggle with the open-set nature of cities. They force novel urban sounds into rigid, pre-defined categories, leading to semantic misalignment.

The Proposal: We suggested moving from “rigid classification” to “unsupervised deviation detection” at the edge, using tagging only as a secondary enrichment layer in the cloud rather than as a primary filter.

3. Architectural Scalability (Edge vs. Cloud)

Analysis: We analyzed the trade-off between transmission cost and detection latency.

Recommendation: We proposed a “Filter-then-Forward” architecture in which edge nodes perform lightweight unsupervised screening and transmit only potential anomalies to the cloud. This reduces bandwidth consumption by orders of magnitude and helps preserve privacy, since most raw audio never leaves the sensor.
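
The sensor-specific baselines, adaptive thresholding, and “Filter-then-Forward” screening described above can be illustrated with a minimal Python sketch. It is an assumption-laden illustration, not the project's implementation: the names `SensorBaseline` and `filter_then_forward` are hypothetical, the anomaly scores are made-up numbers standing in for whatever an edge model would output (for example, a negative log-likelihood), and the exponentially weighted mean and variance are just one simple way to adapt a threshold.

```python
import math
from dataclasses import dataclass


@dataclass
class SensorBaseline:
    """Running statistics of one sensor's anomaly scores (hypothetical helper)."""
    alpha: float = 0.05   # smoothing factor of the exponential moving estimates
    k: float = 3.0        # threshold width, in standard deviations
    warmup: int = 20      # scores to absorb before anything may be flagged
    mean: float = 0.0
    var: float = 0.0
    count: int = 0

    def threshold(self) -> float:
        return self.mean + self.k * math.sqrt(self.var)

    def observe(self, score: float) -> bool:
        """Return True if `score` exceeds this sensor's adaptive threshold."""
        if self.count == 0:
            self.mean = score
            self.count = 1
            return False
        flagged = self.count >= self.warmup and score > self.threshold()
        if not flagged:
            # Flagged scores are not absorbed, so a single loud event
            # does not inflate the baseline.
            delta = score - self.mean
            self.mean += self.alpha * delta
            self.var = (1 - self.alpha) * (self.var + self.alpha * delta * delta)
        self.count += 1
        return flagged


def filter_then_forward(stream, baselines):
    """Yield only the (sensor_id, score) pairs that would be sent to the cloud."""
    for sensor_id, score in stream:
        baseline = baselines.setdefault(sensor_id, SensorBaseline())
        if baseline.observe(score):
            yield sensor_id, score


def demo_stream():
    """Made-up scores: a quiet street and a busier market square."""
    for i in range(40):
        yield "quiet_street", 1.0 if i % 2 == 0 else 1.2
        yield "market_square", 5.0 if i % 2 == 0 else 5.4
    yield "quiet_street", 3.0    # unusual for the quiet street
    yield "market_square", 5.3   # ordinary for the market square


forwarded = list(filter_then_forward(demo_stream(), {}))
print(forwarded)
```

In this toy stream only the quiet-street score of 3.0 is forwarded, even though it is lower than every market-square score, because each sensor is judged against its own baseline. One design consequence of not absorbing flagged scores is that a lasting change in the soundscape would keep being flagged, so a real deployment would need some way to re-learn the baseline.
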

Outcomes & Impact

Empirical Evidence: Provided one of the first comprehensive studies of the limitations of transfer learning in acoustic surveillance using real-world, longitudinal data.

Design Guidelines: The findings laid the foundation for Privacy-Preserving & Adaptive Surveillance and directly influenced the design of subsequent research on privacy in surveillance.

Strategic Value: Delivered critical insights to the industrial partner (AsaSense) on avoiding “technical debt” by pivoting from global models to adaptive, edge-based learning.

Resources

Chapter 2: The AsaSense Project - Detailed analysis of deployment constraints and algorithmic failures.

Jun 30, 2021

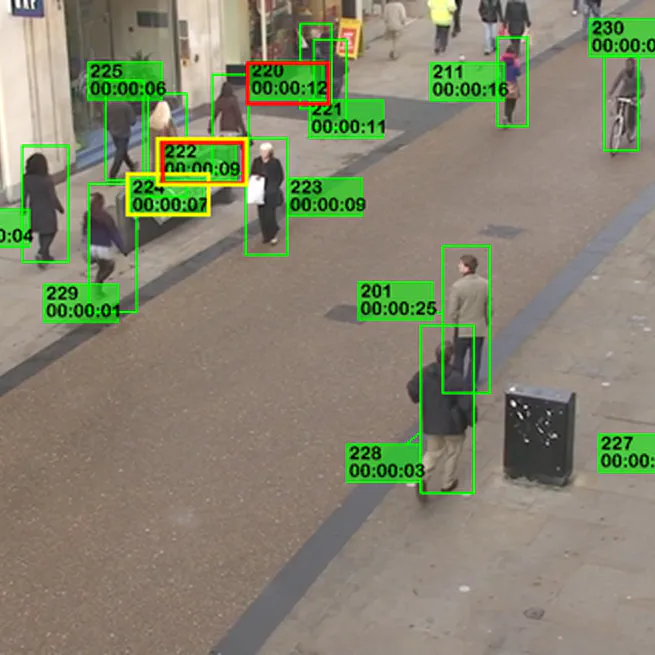

Extracting insights from chaos without labeled data.

This research project focuses on the unsupervised understanding of surveillance video, covering the full pipeline from raw pixel processing to user-centric visualization. The core analysis module uses background modeling to extract foreground entities and builds trajectory kinematics descriptors to capture their motion patterns. By applying unsupervised clustering to these spatiotemporal features, the system automatically distinguishes normal routines from anomalous events without requiring manual annotations.

Beyond detection, my Master’s thesis addressed the challenge of information presentation. I formulated dynamic annotation placement as a spatiotemporal optimization problem. By enforcing coherence constraints, the algorithm computes label positions that maximize readability while minimizing occlusion of critical visual information, ensuring a seamless monitoring experience.

(Details and visual results to follow.)
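
As a rough illustration of the trajectory-based analysis described above, the following Python sketch computes two simple kinematic descriptors per track and flags tracks that have few neighbors in descriptor space. Everything here is an assumption made for illustration: the descriptor choice (mean speed and path straightness), the function names, the toy tracks, and the neighbor-count rule, which stands in for whichever clustering method the project actually used.

```python
import math
from statistics import mean, pstdev


def kinematic_descriptor(track):
    """Return (mean speed, straightness) for a track of (t, x, y) samples."""
    path_length = sum(
        math.hypot(x1 - x0, y1 - y0)
        for (_, x0, y0), (_, x1, y1) in zip(track, track[1:])
    )
    duration = track[-1][0] - track[0][0]
    net = math.hypot(track[-1][1] - track[0][1], track[-1][2] - track[0][2])
    speed = path_length / duration if duration > 0 else 0.0
    # Straightness is 1.0 for a straight path and drops as the path wanders.
    straightness = net / path_length if path_length > 0 else 1.0
    return speed, straightness


def standardize(rows):
    """Z-score each feature column so speed and straightness are comparable."""
    columns = list(zip(*rows))
    stats = [(mean(c), pstdev(c) or 1.0) for c in columns]
    return [tuple((v - m) / s for v, (m, s) in zip(row, stats)) for row in rows]


def flag_sparse_tracks(tracks, eps=1.0, min_neighbors=2):
    """Flag tracks with fewer than `min_neighbors` other tracks within `eps`."""
    features = standardize([kinematic_descriptor(t) for t in tracks])
    flags = []
    for i, f in enumerate(features):
        neighbors = sum(
            1 for j, g in enumerate(features)
            if j != i and math.dist(f, g) <= eps
        )
        flags.append(neighbors < min_neighbors)
    return flags


# Toy data: five straight walks at slightly different speeds, one fast zig-zag.
normal = [[(t, t * (1.0 + 0.05 * j), float(j)) for t in range(6)] for j in range(5)]
zigzag = [(t, 3.0 * t, 4.0 if t % 2 else 0.0) for t in range(6)]

flags = flag_sparse_tracks(normal + [zigzag])
print(flags)
```

On this toy data the five straight walks form a dense group and only the zig-zag track is flagged. The `eps` and `min_neighbors` values are arbitrary and would need tuning on real trajectories.
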

Jun 30, 2016